Linux Basics

This post lists essential commands and concepts which would be helpful to a Linux user. We would cover Linux concepts, boot process, file system, GUI, terminals, basic utilities and operations, networking, process, bash and a lot more. We have used different illustrations by Julia Evans that helps to explain few concepts digramwise. Information is also added from the free Linux Foundation - Introduction to Linux course and Kali Linux Revealed 1st edition.

Linux Concepts

Linux is a full multi-tasking, multi-user OS with built-in networking and service processes known as daemons.

Terminology

Kernel

The kernel is considered the brain of the Linux OS. It controls the hardware and makes the hardware interact with the applications. Example: Linux kernel.

Userspace

User space (or userland) refers to all code that runs outside the operating system’s kernel.

User space usually refers to the various programs and libraries that the operating system uses to interact with the kernel: software that performs input/output, manipulates file system objects, application software, etc.

Distribution

A distribution (Distros) is a collection of programs combined with the Linux kernel to make up a Linux-based OS. Example: Red Hat Enterprise Linux, Fedora, Ubuntu, and Gentoo.

Boot loader

The boot loader is a program that boots the OS. Examples: GRUB and ISOLINUX.

Service

A service is a program that runs as a background process. Example: httpd, nfsd, ntpd, ftpd, and named.

X Window System

The X Window System provides the standard toolkit and protocol to build graphical user interfaces on nearly all Linux systems.

Desktop Environment

The desktop environment is a graphical user interface on top of the OS. Example: GNOME, KDE, Xfce, and Fluxbox

Command Line

The command line is an interface for typing commands on top of the OS. It is a text-based interface that allows to enter commands, execute them, and view the results.

Shell

The shell is the command-line interpreter that interprets the command line input and instructs the OS to perform any necessary tasks and commands. Example: bash, tcsh, and zsh.

The trailing

$or#character indicates that the shell is awaiting the input. It also indicates whether Bash recognizes as a normal user (the dollar,$) or as a super user (the hash,#).The shell executes each command by running the first program of the given name found in a directory listed in the

PATHenvironment variable.

What is Shell

Partition

A partition is a logical part of the disk. By dividing the hard disk into partitions, data can be grouped and separated as needed. When a failure or mistake occurs, only the data in the affected partition will be damaged. In contrast, the data on the other partitions will likely survive.

Filesystem

A filesystem is a method for storing and organizing files in Linux. Example: ext3, ext4, FAT, XFS, and Btrfs.

Device files

There are two types of device files:

Block

A block of data that has a finite size, and any particular byte can be accessed at any position in the block.

Disk drives and partitions use block devices

Character

A flow of characters that can be read and written, but we access to a particular position and change arbitrary bytes is not possible.

Mouse, keyboard, and serial ports use character devices.

To check for a paritcular device file, inspect the first letter in the output of ls -l. It would be b for block devices, or c, for character devices.

$ ls -l /dev/m*

crw-rw----+ 1 root video 506, 0 Mar 20 14:32 /dev/media0

crw-rw----+ 1 root video 506, 1 Mar 20 14:32 /dev/media1

crw-rw----+ 1 root video 506, 2 Mar 20 14:32 /dev/media2

crw-r----- 1 root kmem 1, 1 Mar 20 14:32 /dev/mem

brw-rw---- 1 root disk 179, 0 Mar 20 14:32 /dev/mmcblk0

brw-rw---- 1 root disk 179, 1 Mar 20 14:32 /dev/mmcblk0p1

brw-rw---- 1 root disk 179, 2 May 22 15:54 /dev/mmcblk0p2

Linux Development Process

Developers submit the code to the Linux kernel by breaking changes into individual units called patches.

A patch describes the lines that need to be changed, added, or removed from the source code. Each patch can add a new feature, new support for a device, fix a problem, improve performance, or rework code to be more easily understood.

Developers post their patches to the relevant mailing list where other developers can reply with feedback.

When the patch is close to being release-ready, it is accepted by a senior Linux kernel developer or maintainer who manages different kernel sections.

When the maintainer finishes their review (more extensive evaluation), they will sign off on the patch and send it off to the Linux creator and Linux Foundation fellow (Linus Torvalds). Linus has the ultimate authority on what is accepted into the next release.

Linux Families

Linux (at a very high level) refers to the OS Kernel, i.e., the basic program that communicates with the hardware (CPU, memory, and attached devices) and the applications that run on the computer.

There are currently (the year 2021) three major Linux distribution families.

Redhat Family

Red Hat Enterprise Linux (RHEL) heads the family, including CentOS, Fedora, and Oracle Linux.

SUSE Family

SUSE (SUSE Linux Enterprise Server (SLES)) and openSUSE

Uses the RPM-based

zypperpackage manager to install, update, and remove packages in the system.Includes the YaST (Yet Another Setup Tool) application for system administration purposes.

Debian Family

Uses the DPKG-based APT package manager (using

apt,apt-get,apt-cache) to install, update, and remove packages in the system.

Linux Applications

Linux offers a wide variety of Internet applications, such as web browsers, email clients, online media applications.

Web browsers supported by Linux can be either graphical or text-based, such as Firefox, Google Chrome, Epiphany, w3m, lynx.

Linux supports graphical email clients, such as Thunderbird, Evolution, Claws Mail, and text-mode email clients, such as Mutt and mail.

Linux systems provide many other applications for performing Internet-related tasks, such as Filezilla, XChat, Pidgin.

Most Linux distributions offer LibreOffice to create and edit different kinds of documents.

Linux systems offer entire suites of development applications and tools, including compilers and debuggers.

Linux systems offer several

sound players including Amarok, Audacity, and Rhythmbox.

movie players, including VLC, MPlayer, Xine, and Totem.

movie editors, including Kino, Cinepaint, Blender, among others.

The GIMP (GNU Image Manipulation Program) utility is a feature-rich image retouching and editing tool available on all Linux distributions.

Other graphics utilities that help perform various image-related tasks are eog, Inkscape, convert, and Scribus.

Linux Boot Process

The Linux boot process is the procedure for initializing the system. It consists of everything from when the computer power is first switched on until the user interface is fully operational.

Basic Input/Output System (BIOS) starts the boot loader.

Boot loader loads the kernel into memory.

The Kernel mounts disks/partitions and starts the init daemon.

The init daemon starts services based on the run level.

The boot process has multiple steps, starting with BIOS, which triggers the boot loader to start up the Linux kernel. From there, the initramfs filesystem is invoked, which triggers the init program to complete the startup process.

BIOS

Computer is powered on.

POST (Power On Self Test) starts the BIOS stored on a ROM chip on the motherboard, initializes the hardware, including the screen and keyboard, and tests the main memory.

The remainder of the boot process is controlled by the OS.

Master Boot Record (MBR) and Boot Loader

After POST, the system control passes from the BIOS to the boot loader.

The boot loader is usually stored on one of the hard disks in the system.

either in the boot sector (for traditional BIOS/MBR systems) or

the EFI partition ((Unified) Extensible Firmware Interface or EFI/UEFI systems).

Information on date, time, and the most important peripherals are loaded from the CMOS values. CMOS is a battery-powered memory store, which allows the system to keep track of the date and time even when it is powered off.

Several boot loaders exist for Linux; the most common ones are

GRUB (for GRand Unified Boot loader),

ISOLINUX (for booting from removable media), and

DAS U-Boot (for booting on embedded devices/appliances).

Linux boot loaders can present a user interface for choosing alternative options for booting Linux and even other OSs that might be installed.

The boot loader is responsible for loading the kernel image and the initial RAM disk or filesystem into memory. The init RAM disk contains critical files and device drivers needed to start the system.

Boot loader in action

The boot loader has two distinct stages:

First stage

For systems using the BIOS/MBR method

The boot loader resides at the first sector of the hard disk (Master Boot Record (MBR)). The size of the MBR is just 512 bytes.

The boot loader examines the partition table and finds a bootable partition. Once it finds a bootable partition, it searches for the second stage boot loader, GRUB, and loads it into RAM (Random Access Memory).

For systems using the EFI/UEFI method

UEFI firmware reads its Boot Manager data to determine which UEFI application is to be launched and from which disk/partition.

The firmware then launches the UEFI application, for example, GRUB, as defined in the boot entry in the firmware’s boot manager.

Second stage

The second stage boot loader resides under

/boot.A splash screen is displayed, which allows us to choose which OS (OS) to boot.

After choosing the OS, the boot loader loads the kernel of the selected OS into RAM and passes control to it.

Kernels are almost always compressed, so their first job is to uncompress themselves. After this, it will check and analyze the system hardware and initialize any hardware device drivers built into the kernel.

Initial RAM Disk

The

initramfsfilesystem image contains programs and binary files that perform all actions needed to mount the proper root filesystem.The actions include providing kernel functionality for the required filesystem and device drivers for mass storage controllers with a facility called udev (for user device).

udev is responsible for figuring out which devices are present, locating the device drivers they need to operate correctly, and loading them.

After the root filesystem has been found, it is checked for errors and mounted.

The

mountprogram instructs the OS that a filesystem is ready for use and associates it with a particular point in the overall hierarchy of the filesystem (the mount point). If the above is successful, the initramfs is cleared from RAM, and the/sbin/initprogram is executed.inithandles the mounting and pivoting over to the final real root filesystem. If special hardware drivers are needed before the mass storage can be accessed, they must be in theinitramfsimage.

Text-Mode Login

Near the end of the boot process, init starts several text-mode

logins prompts. These enable the user to provide credentials and get a

command shell.

The terminals which run the command shells can be accessed using the ALT

key plus a function key. Most distributions start with six text

terminals and one graphics terminal starting with F1 or F2.

Within a graphical environment, switching to a text console requires

pressing CTRL-ALT + the appropriate function key (with F7 or F1

leading to the GUI).

Kernel, Init and Services

The boot loader loads both the kernel and an initial RAM-based file

system (initramfs) into memory to be used directly by the kernel.

When the kernel is loaded in RAM, it immediately initializes and configures the computer’s memory and configures all the hardware attached. This includes all processors, I/O subsystems, storage devices. The kernel also loads some necessary user-space applications.

/sbin/init and services

Once the kernel has set up all its hardware and mounted the root

filesystem, the kernel runs /sbin/init. This then becomes the

initial process, which then starts other processes to get the system

running. Most other processes on the system ultimately trace their

origin to init; exceptions include the so-called kernel processes.

These are started by the kernel directly, and their job is to manage

internal OS details.

Besides starting the system, init is responsible for keeping the

system running and shutting it down cleanly. init manages the

non-kernel processes; it cleans up after completion and restarts user

login services when users log in/log in out, other background system

services starts/stops.

The process startup was performed using System V variety of UNIX. This serial process has the system passing through a sequence of run levels containing collections of scripts that start and stop services. Each run level supports a different mode of running the system. Within each runlevel, individual services can be set to run or shut down if running.

However, all major recent distributions have moved away from this sequential runlevel method of system initialization, although they usually support the System V conventions for compatibility purposes.

The two main alternatives developed were:

Upstart

Developed by Ubuntu and first included in 2006. Adopted in Fedora 9 (in 2008) and RHEL 6 and its clones.

systemd

Adopted by Fedora first (in 2011). Adopted by RHEL 7 and SUSE. Replaced Upstart in Ubuntu 16.04

Systems with systemd start up faster than those with earlier init

methods. systemd replaces a serialized set of steps with aggressive

parallelization techniques, permitting multiple services to be initiated

simultaneously.

Simpler configuration files (systemd) have replaced complicated

startup shell scripts (System V). systemd provides information on

pre-checks before service starts, how to execute service startup, and

how to determine startup has finished. One thing to note is that

/sbin/init now just points to /lib/systemd/systemd;

i.e. systemd takes over the init process.

Starting, stopping, restarting a service (using nfs as an example)

on a currently running system:

sudo systemctl start|stop|restart nfs.service

Enabling or disabling a system service from starting up at system boot

sudo systemctl enable|disable nfs.service

Listing all services

sudo systemctl list-units --type=service

sudo systemctl --type=service

or show only active services

systemctl list-units --type=service --state=active

systemctl status : Output shows a hierarchical overview of the running services

Each service is represented by a service unit, which is described by a service file usually shipped in

/lib/systemd/system/(or/run/systemd/system/, or/etc/systemd/system/;They are listed by increasing order of importance, and the last one wins). Each is possibly modified by other

service-name.service.d/*.conffiles in the same set of directories.

Sample service file for ssh

$ cat /lib/systemd/system/ssh.service

[Unit]

Description=OpenBSD Secure Shell server

Documentation=man:sshd(8) man:sshd_config(5)

After=network.target auditd.service

ConditionPathExists=!/etc/ssh/sshd_not_to_be_run

[Service]

EnvironmentFile=-/etc/default/ssh

ExecStartPre=/usr/sbin/sshd -t

ExecStart=/usr/sbin/sshd -D $SSHD_OPTS

ExecReload=/usr/sbin/sshd -t

ExecReload=/bin/kill -HUP $MAINPID

KillMode=process

Restart=on-failure

RestartPreventExitStatus=255

Type=notify

RuntimeDirectory=sshd

RuntimeDirectoryMode=0755

[Install]

WantedBy=multi-user.target

Alias=sshd.service

Target units represent a desired state that we want to attain in terms of activated units (which means a running service in the case of service units). They exist mainly as a way to group dependencies on other units. When the system starts, it enables the units required to reach the

default.target(which is a symlink tographical.target, and which in turn depends onmulti-user.target). So all the dependencies of those targets get activated during boot.Such dependencies are expressed with the

Wantsdirective on the target unit. We can create a symlink pointing to the dependent unit in the/etc/systemd/system/target-name.target.wants/directory. When we enable a service,systemdadd a dependency on the targets listed in theWantedByentry of the[Install]section of the service unit file. Conversely,systemctldisablefoo.servicedrops the same symlink and thus the dependency.

Linux Runlevels

Linux has six runlevels 0-6. Scripts are contained in /etc/rc[0-6,S].d/.

Each folder contains the scripts followed by either K or S. If the first

letter is K that script is not executed. If S, that script is executed.

/etc/inittab contains the default run level.

I D |

Name |

Description |

|---|---|---|

0 |

Halt |

Shuts down the system |

1 |

Single user Mode |

Mode for administrative tasks. |

2 |

Multi user Mode |

Does not configure network interfaces and does not export networks services |

3 |

Multi user Mode with Net working |

Starts the system normally. |

4 |

Not used/ User-de finable |

For special purposes. |

5 |

Start system normally with display manager (with GUI). |

Same as runlevel 3 + display manager |

6 |

Reboot |

Reboot the system |

Linux Filesystem

Different types of filesystems supported by Linux:

Conventional disk filesystems: ext2, ext3, ext4, XFS, Btrfs, JFS, NTFS, etc.

Flash storage filesystems: ubifs, JFFS2, YAFFS, etc.

Database filesystems

Special purpose filesystems: procfs, sysfs, tmpfs, squashfs, debugfs, etc.

Data Distinctions

Variable vs. Static

Static data include binaries, libraries, documentation, and anything that does not change withoutsystem administrator assistance.

Variable data is anything that may change even without a system administrator’s help.

Linux Directories

/root : (slash-root) is the home directory for the root user.

/home : users home directories.

/etc : system-wide configuration files.

/bin : directories with executable files.

/usr/bin

/usr/local/bin

/lib : shared libraries needed to upport the applications.

/usr/lib

/usr/local/lib

/sbin : directories with executables supposed to be run by the Superuser.

/usr/sbin

/usr/local/sbin

/tmp : temporary directories, watch out as /tmp is, by default, cleaned out on each reboot.

/var/tmp

/usr/share/doc : complete system documentation.

/usr/share/man

/dev : system device files. In Unix, hardware devices are represented as files.

/proc : "virtual" directory containing files through which you can query or tune Linux kernel settings.

/boot : contains the basic files needed to boot the system.

/media : Mount points for removable media such as CDs, DVDs, USB sticks, etc.

/mnt : Temporarily mounted filesystems.

/opt : Optional application software packages.

/run : Run-time variable data, containing information describing the system since it was booted.

/sys : Virtual sysfs pseudo-filesystem giving information about the system and processes running on it.

/var : Variable data that changes during system operation.

/srv : contains site-specific data which is served by this system.

Filesystems are mounted on the mount point that could be simply a directory (which may or may not be empty) where the filesystem is grafted.

/bin

The /bin directory contains executable binaries, essential commands

used to boot the system or in single-user mode, and essential commands

required by all system users, such as cat, cp, ls, mv,

ps, and rm.

/sbin

The /sbin directory is intended for essential binaries related to

system administration, such as fsck and ip. Commands that are

not essential (theoretically) for the system to boot or operate in

single-user mode are placed in the /usr/bin and /usr/sbin

directories.

/proc

/proc are called pseudo-filesystems because they have no permanent

presence anywhere on the disk, and they exist only in memory.

The /proc filesystem contains virtual files (files that exist only

in memory). It includes files and directories that mimic kernel

structures and configuration information. It does not have real files

but runtime system information, e.g., system memory, devices mounted,

hardware configuration, etc. Some necessary entries in /proc are:

/proc/cpuinfo

/proc/interrupts

/proc/meminfo

/proc/mounts

/proc/partitions

/proc/version

/proc has subdirectories, including:

/proc/<Process-ID-#>

/proc/sys

The

/proc/<Process-ID-#>shows a directory for every process running on the system, which contains vital information about it.The

/proc/sysshows a virtual directory containing a lot of information about the entire system, particularly its hardware and configuration.

/dev

The /dev directory contains device nodes, a pseudo-file used by most

hardware and software devices, except for network devices. This

directory is:

Empty on the disk partition when it is not mounted

Contains entries created by the

udevsystem, which creates and manages device nodes on Linux, creating them dynamically when devices are found. The/devdirectory contains items such as:/dev/sda1(first partition on the first hard disk)/dev/lp1(second printer)/dev/random(a source of random numbers).

/var

The /var directory contains files that are expected to change in

size and content as the system is running (var stands for variable),

such as the entries in the following directories:

/var/log : System log files.

/var/lib : Packages and database files

/var/spool : Print queues.

/var/tmp : Temporary files

/var/lock : Lock files used to control simultaneous access to resources

/var/www : Root for website hierarchies

The /var directory may be put on its own filesystem so that the

growth of the files can be accommodated and any exploding file sizes do

not fatally affect the system. Network services directories such as

/var/ftp (the FTP service) and /var/www (the HTTP web service)

are also found under /var.

/etc

The /etc directory is the home for system configuration files. It

contains no binary programs, although there are some executable scripts.

For example, /etc/resolv.conf tells the system where to go on the

network to obtain hostname to IP address mappings (DNS). Files like

passwd, shadow, and group for managing user accounts are

found in the /etc directory.

Note

/etc is for system-wide configuration files, and only the superuser can modify files there. User-specific configuration files are always found under their home directory.

/etc/skel : Contains skeleton files used to populate newly created home directories.

/etc/systemd : Contains or points to configuration scripts for starting, stopping system services when using systemd.

/etc/init.d : Contains startup and shut down scripts when using System V initialization.

/boot

The /boot directory contains the few essential files needed to boot

the system. For every alternative kernel installed on the system, there

are four files:

vmlinuz : The compressed Linux kernel required for booting.

initramfs : The initial ram filesystem, required for booting, sometimes called

initrd, notinitramfs.config : The kernel configuration file, only used for debugging and bookkeeping.

System.map : Kernel symbol table, only used for debugging.

Each of these files has a kernel version appended to its name.

The Grand Unified Bootloader (GRUB) files such as

/boot/grub/grub.conf or /boot/grub2/grub2.cfg are also found

under the /boot directory.

/lib

/lib contains libraries (common code-shared by applications and

needed for them to run) for the essential programs in /bin and

/sbin. These library filenames either start with ld or lib.

For example, /lib/libncurses.so.5.9.

These are dynamically loaded libraries (also known as shared libraries

or Shared Objects (SO)). On some Linux distributions, there exists a

/lib64 directory containing 64-bit libraries, while /lib

contains 32-bit versions.

Kernel modules (kernel code, often device drivers, that can be loaded

and unloaded without restarting the system) is located in

/lib/modules/<kernel-version-number>. PAM (Pluggable Authentication

Modules) files are stored in /lib/security.

Others

/opt: Optional application software packages/sys: Virtual pseudo-filesystem giving information about the system and the hardware. It can be used to alter system parameters and for debugging purposes./srv: Site-specific data served up by the system/tmp: Temporary files; on some distributions erased across a reboot and/or may be a ramdisk in memory/usr: Multi-user applications, utilities, and data/usr/include: Header files used to compile applications./usr/lib: Libraries for programs in /usr/bin and /usr/sbin./usr/lib64: 64-bit libraries for 64-bit programs in /usr/bin and /usr/sbin./usr/sbin: Non-essential system binaries, such as system daemons./usr/share: Shared data used by applications, generally architecture-independent./usr/src: Source code, usually for the Linux kernel./usr/local: Data and programs specific to the local machine. Subdirectories include bin, sbin, lib, share, include, etc./usr/bin: This is the primary directory of executable commands on the system

In Linux, a file’s extension often does not categorize it the way it

might in other OSs. One cannot assume that a file named file.txt is

a text file and not an executable program.

The real nature of a file can be ascertained by using the file

utility. For the file names given as arguments, it examines the contents

and certain characteristics to determine whether the files are plain

text, shared libraries, executable programs, scripts, or something else.

Other Information

File System Superblock

The superblock stores the metadata of the file system, such as

Blocks in the file system

No of free blocks in the file system

Inodes per block group

Blocks per block group

No of times the file system was mounted since last fsck.

Mount time

UUID of the file system

Write time

File System State (i.e., was it cleanly unmounted, errors detected)

The file system type (i.e., whether it is ext2,3 or 4).

The OS in which the file system was formatted

View superblock information

dumpe2fs -h /dev/sda4

dumpe2fs 1.42.9 (4-Feb-2014)

Filesystem volume name: cloudimg-rootfs

Last mounted on: /

Filesystem UUID: f75f9307-27dc-xxxx-87b7-xxxxxxxxx

Filesystem magic number: 0xEF53

Filesystem revision #: 1 (dynamic)

Filesystem features: has_journal ext_attr resize_inode dir_index filetype needs_recovery extent flex_bg sparse_super large_file huge_file uninit_bg dir_nlink extra_isize

Filesystem flags: signed_directory_hash

Default mount options: (none)

Filesystem state: clean

Errors behavior: Continue

Filesystem OS type: Linux

.....sniped.......

du

Estimate file space usage

du

-h : human readable

-x : skip directories on different file system

-c : show the totals

--exclude=PATTERN : exclude files that match PATTERN

Show disk usage

du -xhcx --exclude=proc * : provide the disk usage while excluding proc directory

mkfs

MaKe FileSystem handle formatting. It requires as a parameter, a device file representing the partition to be formatted (for instance, /dev/sdb1). The operation is destructive and will format the partition and create a filesystem.

GUI and Terminal

X Window System

The X Window System (X) is loaded as one of the final steps in the boot process.

A service called the Display Manager keeps track of the displays being provided and loads the X server (so-called, because it provides graphical services to applications, sometimes called X clients). The display manager also handles graphical logins and starts the appropriate desktop environment after a user logs in.

A desktop environment consists of a session manager, which starts and maintains the components of the graphical session, and the window manager, which controls the placement and movement of windows, window title bars, and controls. Although these can be mixed, generally, a set of utilities, session manager, and window manager are used together as a unit and provide a seamless desktop environment.

If the display manager is not started by default in the default run level. The graphical desktop can be started differently, such as logging on to a text-mode console by running

startxfrom the command line. Or, start the display manager (gdm,lightdm,kdm,xdm, etc.) manually from the command line. This differs from runningstartxas the display managers will project a sign-in screen.Logging out through the desktop environment kills all processes in your current X session and returns to the display manager login screen.

gnome-tweak-toolexposes several setting options regarding graphical display.

Current screen resolution

xdpyinfo | grep dim

dimensions: 3200x1080 pixels (847x286 millimeters)

Turn off the graphical desktop

Linux distributions can start and stop the graphical desktop in various ways.

For the newer systemd-based distributions, the display manager is run as

a service. The GUI desktop can be stopped with the systemctl

utility, and most distributions will also work with the telinit

command. telinit can be used to change the SysV system runlevel.

sudo systemctl stop gdm (or sudo telinit 3)

and restart it (after logging into the console) with:

sudo systemctl start gdm (or sudo telinit 5)

On Ubuntu versions before 18.04 LTS, substitute lightdm for gdm.

Terminal Emulator

A terminal emulator program emulates (simulates) a standalone terminal

within a window on the desktop. It is equivalent to logging into the

machine at a pure text terminal with no running graphical interface. The

gnome-terminal application is used to emulate a text-mode terminal in a

window. Other available terminal programs include xterm, rxvt,

konsole (default on KDE), terminator.

Virtual Terminal

Virtual Terminals (VT) are console sessions that use the entire display and keyboard outside of a graphical environment. Such terminals are considered “virtual” because, although there can be multiple active terminals, only one terminal remains visible at a time.

To switch between VTs, press the CTRL-ALT-function key for the VT.

For example, press CTRL-ALT-F6 for VT 6.

Screen Multiplexer

tmux

tmux is a terminal multiplexer: it enables a number of terminals to

be created, accessed, and controlled from a single screen.

tmux new -s myname : start new with session name

tmux list-sessions : show sessions

tmux ls : show sessions

tmux list-windows : show windows

tmux attach-session -t myname : Attach to session named "myname"

tmux a -t myname : Attach to session named "myname"

(Prefix) + d : detach. By default, tmux uses Ctrl b as the prefix key

Windows (Tabs)

(Prefix Key) +

c create window

w list windows

n next window

p previous window

f find window

, name window

& kill window

" split pane horizontally.

% split pane vertically.

arrow key — switch the pane.

Hold Ctrl+b, don't release it, and hold one of the arrow keys — resize the pane.

tmux.conf

# Enable mouse mode

set -g mouse on

Reloading tmux config

Suppose we have made changes to the tmux configuration file in the

~/.tmux.conf file, it shouldn’t be necessary to start the server up

again from scratch with kill-server. Instead, the current tmux session

can reload the configuration with the source-file command. This can

be done either from within tmux, by pressing Ctrl+B or

Prefix key and then : to bring up a command prompt, and typing:

:source-file ~/.tmux.conf

Or simply from a shell:

tmux source-file ~/.tmux.conf

This should apply your changes to the running tmux server without

affecting the sessions or windows within them.

Tmux Copy Paste

For copying,

Press the

Shiftkey; i.e.,Shift-MouseHighlightproperly selects text and - still holding down the shift key; right-click and get the standard bash context menu with Copy, Paste, etc.or

Ctrl-Shift-CandCtrl-Shift-Vdo work to copy and paste the text.

Basic Utilities and Operations

Binary Locations

Depending on the specifics of a particular distribution policy, programs

and software packages are installed in various directories. In general,

executable programs and scripts should live in the /bin,

/usr/bin, /sbin, /usr/sbin directories, or somewhere under

/opt. They can also appear in /usr/local/bin and

/usr/local/sbin.

Command-line Parameters

Most input lines entered at the shell prompt have three essential elements:

Command

Options

Arguments

The command is the name of the program you are executing. It may be

followed by one or more options (or switches) that modify what the

command may do. Options usually start with one or two dashes, such as

-p or --print, to differentiate them from arguments,

representing what the command operates on.

Getting Help

The primary sources of Linux documentation are the man pages, GNU

info, the help options and commands, and a wide variety of

online documentation sources. Further, Eric Raymond’s guidelines on How To Ask Questions The Smart Way is a good way to avoid the most common mistakes and get useful answers.

man

The man program searches, formats, and displays the information

contained in the man page system. The man pages are divided into

chapters numbered 1 through 9.

Commands that can be executed from the command line

System calls (functions provided by the kernel)

Library functions (provided by system libraries)

Devices (on Unix-like systems, these are special files, usually placed in the /dev/ directory)

Configuration files (formats and conventions)

Games

Sets of macros and standards

System administration commands

Kernel routines

With the -a parameter, man will display all pages with the given

name in all chapters, one after the other, as in:

man -a socket

The chapter number can be used to force man to display the page from

a particular chapter.

man 7 socket

whatis : Provides a one-line description of the commands.

man -f : generates the same result as typing whatis.

man -k : generates the same result as typing apropos.

If we do not know the names of the commands. We can use

aproposcommand, which searches manual pages (or more specifically their short descriptions) for any keywords provided.The

aproposcommand then returns a list of manual pages whose summary mentions the requested keywords along with the one-line summary from the manual page.

Example:

$ apropos "copy file"

cp (1) - copy files and directories

cpio (1) - copy files to and from archives

git-checkout-index (1) - Copy files from the index to the working tree

install (1) - copy files and set attributes

ntfscp (8) - copy file to an NTFS volume.

GNU Info

Typing

infowith no arguments in a terminal window displays an index of available topics. The user can browse through the topic list using the regular movement keys: arrows, Page Up, and Page Down.Help for a particular topic can be viewed by typing

info <topic name>. The system then searches for the topic in all available info files.The topic currently viewed on an info page is called a node. Basic keystrokes for moving between nodes are

n(Go to the next node),p(Go to the previous node),u(move one node up in the index).

Nodes are essentially sections and subsections in the documentation. The user can move between nodes or view each node sequentially. Each node may contain menus and linked subtopics or items.

We can also use

pinfoto reach the documentation.

help

Most commands have an available short description which can be viewed

using the --help or the -h option along with the command or

application.

To view a synopsis of these built-in commands, the user can type

help

help

help dirs

Graphical Help System

GNOME:

gnome-helporyelpKDE:

khelpcenter

Package Documentation

Linux documentation is also available as part of the package management system. Usually, this documentation is directly pulled from the upstream source code, but it can also contain information about how the distribution packaged and set up the software.

Such information is placed under the /usr/share/doc directory,

grouped in subdirectories named after each package, perhaps including

the version number in the name.

If the filename extension is of .txt.gz, it can be read using

zcat filename.txt.gz

Locating Applications

which : find out exactly where the program resides on the filesystem.

whereis : locate the binary, source, and manual page files for a command.

Exploring Filesystem and Directories

cd

pwd : Displays the present working directory

cd ~ or cd : Change to your home directory (shortcut name is ~ (tilde))

cd .. : Change to parent directory (..)

cd - : Change to previous directory (- (minus))

The cd command remembers the last directory and allows it to reach

there with cd -. For remembering more than just the previous

directory visited,

use

pushdto change the directory instead ofcd; this pushes the starting directory onto a list.using

popdwill then send back to those directories, walking in reverse order (the most recent directory will be the first one retrieved withpopd).The list of directories is displayed with the

dirscommand.

tree

tree : get a bird's-eye view of the filesystem tree.

-d : Use tree -d to view just the directories and to suppress listing file names.

ls

ls : list files

-F : append indicator (one of */=>@|) to entries

find .can also be used to list the files in the current directory iflsis somehow blocked in restricted shells.

ls showing full path

ls -R /path | awk '/:$/&&f{s=$0;f=0} /:$/&&!f{sub(/:$/,"");s=$0;f=1;next} NF&&f{ print s"/"$0 }'

Creating and deleting files and directories

mv : Rename a file, directory.

rm : Remove a file.

rm -f : Forcefully remove a file.

rm -i : Interactively remove a file.

rm -rf : Remove a directory and all of its contents recursively.

mkdir : mkdir is used to create a directory

rmdir : Removing a directory. The directory must be empty or the command will fail.

Creating a simple file

The editor command starts a text editor (such as Vi or Nano) and allows creating, modifying, and reading text files.

echo

echo can be used to display a string on standard output (i.e. the

terminal) or to place in a new file (using the > operator) or append

to an already existing file (using the >> operator).

echo string

The -e option, along with the following switches, is used to enable

special character sequences, such as the newline character or horizontal

tab.

\n represents newline

\t represents a horizontal tab.

echo is handy for viewing the values of environment variables

(built-in shell variables). For example, echo $USER will print

the name of the user who has logged into the current terminal.

If a file needs to be created without using an editor, there are two standard ways to create one from the command line and fill it with content.

The first is to use echo repeatedly:

echo line one > myfile

echo line two >> myfile

echo line three >> myfile

cat

The second way is to use cat combined with redirection:

cat << EOF > myfile

> line one

> line two

> line three

> EOF

Editing text files using Vi

Typing

vimtutorlaunches a short but very comprehensive tutorial for those who want to learn their firstvicommands.A binary visual editor (bvi) can be used to binary files.

Open file with vi

vi <filename> - Open a file to edit in Vi editor.

Vi Modes

Three modes

Command,

Insert, and

line.

Command Mode

By default, vi starts in command mode. Each key is an editor

command. Keyboard strokes are interpreted as commands that can modify

file contents.

Cursor Positions

h,l,j,k - Move left, right, down, up

w - Move to the start of the next word.

e - Move to the end of the word.

b - Move to the beginning of the word.

3w - 3w is similar to pressing w 3 times, moves to the start of the third word.

30i-'EscKey' - 30(insert>-(EscapeKey> : Inserts 30 - at once.

f - find and move to the next (or previous) occurrence of a character. fo find next o.

3fo - find the third occurrence of o

% - In the text that is structured with parentheses or brackets, ( or { or [, use % to jump to the matching parenthesis or bracket.

0 (Zero) - Reach beginning of the line

$ - Reach end of the line.

- - Find the next occurrence of the word under the cursor

# - Find the previous occurrence of the word under the cursor

gg - Reach the beginning of the file

H - Reach the beginning of the file

L - Reach the end of the file

G - Reach the end of the file

30G - Reach the 30th line in the file

. - Repeat the last command

z= - If the cursor is on the word (highlighted with spell check), vim will suggest a list of alternatives that it thinks may be correct.

Searching text in vi

/text - Search for the text. Utilize n, N for next and previous occurrences.

?text - Search backward for pattern

Working with text in vi

o - Insert a new line below the cursor

O - Insert a new line above the cursor

x - Delete the character

r - replace the character with the next key pressed.

cw - change the current word (puts the vi in insert mode)

dw - Delete the current word.

dd - Delete the current line.

d$ - Delete the text from where your cursor is to the end of the line.

dnd - Delete n lines.

yy - Yank or copy the current line.

y$, yny - Similar to delete lines.

p - Paste the line in the buffer into text after the currentline.

u -

Insert Mode

Type i or a to switch to Insert mode from Command mode. Insert

mode is used to enter (insert) text into a file. Insert mode is

indicated by an ? INSERT ? indicator at the bottom of the screen.

Press Esc to exit Insert mode and return to Command mode.

Line Mode

Type : to switch to the Line mode from Command mode. Each key is an

external command, including writing the file contents to disk or

exiting.

Press Esc to exit Line mode and return to Command mode.

:q - Quit.

:q! - Quit even modifications have not been saved

:w - write to the file

:w filename - write out to the filename

:w! filename - overwrite filename

:x or :wq - Save and close.

:syntax on - Turn on Syntax highlighting for C programming and other languages.

:history - Shows the history of the commands executed

:set number - Turn on the line numbers.

:set nonumber - Turn off the line numbers.

:set spell spelllang=en_us - Turn spell checking on with spell language as "en_us"

:set nospell - Turn spell checking off

:set list - If 'list' is on, whitespace characters are made visible. The default displays "^I" for each tab, and "$" at each EOL (end of line, so trailing whitespace can be seen)

:u - Undo one change.

:%!xxd - to turn it into a hex-editor.

:%!xxd -r - to go back to normal mode (from hexedit mode)

:%!fmt - format the text using the fmt command

Using external commands in vi

:!{cmd} - Run the command without exiting the vim. {cmd} can be whoami without external brackets.

Vi Configuration Files

Two configurations files which are important:

.vimrc

It contains optional runtime configuration settings to initialize

vim when it starts. Example: If you want vim to have syntax on

and line numbers on, whenever you open vi, enter syntax on and

set number in this file.

##Sample contents of .vimrc

syntax on

set number

A good details about various options which can be set in vimrc can be found at A Good Vimrc

.viminfo

viminfo file stores command-line, search string, input-line history,

and other stuff. Useful if you want to find out what the user has been

doing in vi.

Both files are present in the user home directory.

Replace text in Vi

:s/test/learn - would replace test to learn in current line but only first instance.

:s/test/learn/g - would replace test to learn in current line all the instance.

:s/test/learn/gi - would replace test (all cases) to learn in current line all the instance.

:%s/test/learn/gi - would replace test to learn in the file (all lines)

Other Info

Vim Awesome provides Awesome VIM plugins from across the universe. A few good one are

The NERD tree : Tree explorer plugin for vim

:NERDTreeToggle : Toggle the NERD Tree :NERDTreeFocus : Set the focus to NerdTree

Syntastic : Syntax checking hacks for vim

SyntasticCheck - Check for the possible syntax issuesYoucompleteme : Code-completion engine for Vim

fzf : Bundle of fzf-based commands and mappings

GFiles [OPTS] : Git files (git ls-files) GFiles? : Git files (git status) History : v:oldfiles and open buffers History: : Command history History/ : Search history Snippets : Snippets (UltiSnips) Commits : Git commits (requires fugitive. vim) BCommits : Git commits for the current buffer Commands : Commands

UltiSnips The ultimate snippet solution for Vim

Tabular : Vim script for text filtering and alignment

Select the text which you want to align in the visual mode (Do make sure that the cursor is also at the same position as visual) :Tabularize /{pattern to be aligned}

Utilize Vundle, the plugin manager for vim

:PluginList - lists configured plugins :PluginInstall - installs plugins; append `!` to update or just:PluginUpdate :PluginSearch foo - searches for foo; append `!` to refresh local cache :PluginClean - confirms removal of unused plugins; append `!` to auto-approve removal

Manipulating Text

cut - remove sections from each line of files

cut OPTION... [FILE]...

-d : use DELIM instead of TAB for field delimiter.

-f : select only these fields.

sed

sed (stream editor) is used to modify the contents of a file or

input stream, usually placing the contents into a new file or output

stream.

Data from an input source/file (or stream) is taken and moved to a working space. The entire list of operations/modifications is applied over the data in the working space, and the final contents are moved to the standard output space (or stream).

sed -e command <filename>

Specify editing commands at the command line, operate on file and put the output on standard out (e.g. the terminal)

sed -f scriptfile <filename> : Specify a scriptfile containing sed commands, operate on file, and put output on standard out

sed s/pattern/replace_string/ file : Substitute first string occurrence in every line

sed s/pattern/replace_string/g file : Substitute all string occurrences in every line

sed 1,3s/pattern/replace_string/g file : Substitute all string occurrences in a range of lines

sed -i s/pattern/replace_string/g file : Save changes for string substitution in the same file

Example: To convert 01/02/… to JAN/FEB/…

sed -e 's/01/JAN/' -e 's/02/FEB/' -e 's/03/MAR/' -e 's/04/APR/' -e 's/05/MAY/' \

-e 's/06/JUN/' -e 's/07/JUL/' -e 's/08/AUG/' -e 's/09/SEP/' -e 's/10/OCT/' \

-e 's/11/NOV/' -e 's/12/DEC/'

Example: Search for all instances of the user command interpreter

(shell) equal to /sbin/nologin in /etc/passwd and replace them

with /bin/bash.

To get output on standard out (terminal screen):

sed s/'\/sbin\/nologin'/'\/bin\/bash'/g /etc/passwd

or to direct to a file:

sed s/'\/sbin\/nologin'/'\/bin\/bash'/g /etc/passwd > passwd_new

Note that this is painful and obscure because we are trying to use the forward-slash ( / ) as both a string and a delimiter between fields. One can do instead:

sed s:'/sbin/nologin':'/bin/bash':g /etc/passwd

where we have used the colon ( : ) as the delimiter instead. (You

are free to choose your delimiting character!) In fact, when doing this,

we do not even need the single quotes:

bitvijays/tmp> sed s:/sbin/nologin:/bin/bash:g /etc/passwd

Example

sed -i '/^$/d' : is used to delete empty lines in a file using the -i option to edit the file in-place.

awk

awk is an interpreted programming language, typically used as a data

extraction and reporting tool. awk is used to extract and then print

specific contents of a file and is often used to construct reports.

awk `command' file : Specify a command directly at the command line

awk -f scriptfile file : Specify a file that contains the script to be executed

The input file is read one line at a time, and, for each line, awk

matches the given pattern in the given order and performs the requested

action. The -F option allows specifying a particular field separator

character. For example, the /etc/passwd file uses : to separate

the fields, so the -F: option is used with the /etc/passwd file.

The command/action in awk needs to be surrounded with apostrophes (or

single-quote (‘)). awk can be used as follows:

awk '{ print $0 }' /etc/passwd : Print entire file

awk -F: '{ print $1 }' /etc/passwd : Print first field (column) of every line, separated by a space

awk -F: '{ print $1 $7 }' /etc/passwd : Print first and seventh field of every line

Awk converting to normal output to csv

A B --> "A","B"

awk '{print "\"" $1 "\",\"" $2"\""}'

sort

According to a sort key, sort is used to rearrange the lines of a

text file, in either ascending or descending order. It can also sort

with respect to particular fields (columns) in a file. The default sort

key is the order of the ASCII characters (i.e., essentially

alphabetically).

sort <filename> : Sort the lines in the specified file according to the characters at the beginning of each line

cat file1 file2 | sort : Combine the two files, then sort the lines and display the output on the terminal

sort -r <filename> : Sort the lines in reverse order

sort -k 3 <filename> : Sort the lines by the 3rd field on each line instead of the beginning

When used with the -u option, sort checks for unique values after

sorting the records (lines). It is equivalent to running uniq on the

output of sort.

uniq

uniq removes duplicate consecutive lines in a text file and helps

simplify the text display.

Because uniq requires that the duplicate entries must be

consecutive, one often runs sort first and then pipes the output

into uniq; if sort is used with the -u option, it can do all

this in one step.

To remove duplicate entries from multiple files at once, use the following command:

sort file1 file2 | uniq > file3

or

sort -u file1 file2 > file3

To count the number of duplicate entries, use the following command:

uniq -c filename

paste

paste can combine fields (such as name or phone number) from

different files and combine lines from multiple files. For example,

paste can combine line 1 from file1 with line 1 of file2, line 2

from file1 with line two of file2, and so on.

Let’s assume there are two files; one contains the full name of all employees, and another contains their phone numbers and Employee IDs. We want to create a new file that contains all the data listed in three columns: name, employee ID, and phone number.

paste can be used to create a single file containing all three

columns. The different columns are identified based on delimiters

(spacing used to separate two fields). For example, delimiters can be a

blank space, a tab, or an Enter.

paste accepts the following options:

-d delimiters : specify a list of delimiters to be used instead of tabs for separating consecutive values on a single line. Each delimiter is used in turn; when the list has been exhausted, paste begins again at the first delimiter.

-s : causes paste to append the data in series rather than in parallel; that is, in a horizontal rather than vertical fashion.

To paste contents from two files:

paste file1 file2

The syntax to use a different delimiter is as follows:

paste -d, file1 file2

Common delimiters are space, tab, |, comma, etc.

join

join combines lines from two files based on a common field. It works

only if files share a common field.

Suppose we have two files with some similar columns. We have saved employees’ phone numbers in two files, one with their first name and the other with their last name. We want to combine the files without repeating the data of common columns. How do we achieve this?

The above task can be achieved using join, which is essentially an

enhanced version of paste. It first checks whether the files share

common fields, such as names or phone numbers, and then joins the lines

in two files based on a common field.

To combine two files on a common field,

join file1 file2

split

split is used to break up (or split) a file into equal-sized

segments for easier viewing and manipulation and is generally used only

on relatively large files. By default, split breaks up a file into

1000-line parts. The original file remains unchanged, and a set of new

files with the same name plus an added prefix is created. By default,

the x prefix is added.

split a file into segments:

split infile

split a file into segments using a different prefix

split infile <Prefix>

We will apply split to an American-English dictionary file of over 99,000 lines:

wc -l american-english

99171 american-english

We have used wc (word count) to report the number of lines in the

file. Then, typing:

split american-english dictionary

will split the American-English file into 100 equal-sized segments named dictionaryxx.

strings

strings is used to extract all printable character strings found in

the file or files given as arguments. It is useful in locating

human-readable content embedded in binary files.

For example, to search for the string my_string in a spreadsheet:

strings book1.xls | grep my_string

tr

tr translates characters, copies standard input to standard output,

and handles special characters. The tr utility is used to translate

specified characters into other characters or to delete them. The

general syntax is as follows:

tr [options] set1 [set2]

The items in the square brackets are optional.

tr abcdefghijklmnopqrstuvwxyz ABCDEFGHIJKLMNOPQRSTUVWXYZ : Convert lower case to upper case

tr '{}' '()' < inputfile > outputfile : Translate braces into parenthesis

echo "This is for testing" | tr [:space:] '\t' : Translate white-space to tabs

echo "This is for testing" | tr -s [:space:] : squeeze repetition of characters using -s

echo "the geek stuff" | tr -d 't' : Delete specified characters using -d option

echo "my username is 432234" | tr -cd [:digit:] : Complement the sets using -c option

tr -cd [:print:] < file.txt : Remove all non-printable character from a file

tr -s '\n' ' ' < file.txt : Join all the lines in a file into a single line

tee

tee takes the output from the command, and one stream is displayed

on the standard output, and the other is saved to a file.

For example, to list the contents of a directory on the screen and save the output to a file.

ls -l | tee newfile

cat newfile will then display the output of ls –l.

wc

wc (word count) counts the number of lines, words, and characters in

a file or list of files.

wc

-l : Displays the number of lines

-c : Displays the number of bytes

-w : Displays the number of words

cut

cut is used for manipulating column-based files and is designed to

extract specific columns. The default column separator is the tab

character. A different delimiter can be given as a command option.

For example, to display the third column delimited by a blank space.

ls -l | cut -d " " -f3

alias

We can create customized commands or modify the behavior of already

existing ones by creating aliases. These aliases are placed in

~/.bashrc file, so they are available to any command shells.

unalias removes an alias.

Typing alias with no arguments will list currently defined aliases.

Viewing Files

echo

echo can be used to read files.

echo *: List the current folder files.echo */*: List all the files in the next sub-folders level.echo .*: List hidden files.echocan also be used to read filesecho "$( < filename.txt )"

cat

cat, short for concatenate, is used to read, print, and combine

files.

cat : Used for viewing files that are not very long; it does not provide any scroll-back.

When

catsees the string-as a filename, it treats it as a synonym forstdin.To get around this, we need to alter the string that

catsees in such a way that it still refers to a file called-.The usual way of doing this is to prefix the filename with a path

-./-, or/home/username/-.This technique is also used to get around similar issues where command-line options clash with filenames, so a file referred to as

./-edoes not appear as the-ecommand-line option to a program.

The

taccommand (catspelled backward) prints the lines of a file in reverse order. Each line remains the same, but the order of lines is inverted. The syntax oftacis the same as for thecat, as in:tac file tac file1 file2 > newfile

xxd

Make a hexdump or do the reverse.

-g bytes : number of octets per group in normal output. Default 2

-l len : stop after len octets.

-s [+][-]seek : start at seek bytes abs. (or +: rel.) infile offset.

hexdump

Display file contents in hexadecimal, decimal, octal, or ascii.

-s, --skip <offset> skip offset bytes from the beginning

View large files

less

less can be used to view the contents of a large file requiring

scrolling up and down page by page, without the system having to place

the entire file in memory before starting. This is much faster than

using a text editor.

head

head reads the first few lines of each named file (10 by default)

and displays it on standard output. The number of lines to read can be

provided in an option.

For example, if we need to print the first 5 lines from grub.cfg,

use the following command:

head -n 5 grub.cfg

head -5 grub.cfg

tac : used to look at a file backward, starting with the last line.

less : print information one per page.

more : prints information one per page.

head : prints first 10 lines

tail : prints last 10 lines.

tail

tail prints the last few lines of each named file and displays it on

standard output. By default, it shows the last 10 lines. The number of

lines can be provided in an option. tail is handy when

troubleshooting any issue using log files as the user probably wants to

see the most recent output lines.

For example, to display the last 15 lines of somefile.log, use the following command:

tail -n 15 somefile.log

tail -15 somefile.log

To continually monitor new output in a growing log file:

tail -f somefile.log

Viewing compressed files

Reading compressed files for many commonly-used file and text

manipulation programs requires different associated utilities having the

letter “z” prefixed to their name. For example, we have utility programs

such as zcat, zless, zdiff and zgrep.

Here is a table listing some z family commands for gzip

compression method:

zcat compressed-file.txt.gz

zless somefile.gz

zmore somefile.gz : To page through a compressed file

zgrep -i less somefile.gz : To search inside a compressed file

zdiff file1.txt.gz file2.txt.gz : To compare two compressed files

There is bzcat and bzless associated with bzip2 compression

method, and xzcat and xzless associated with xz compression

method.

Searching Files

locate

find files by name. locate utilizes a database created by

updatedb. The database is automatically once a day and can be

updated manually by running updatedb.

Examples:

locate yum : locate a file named yum

locate .doc : locate files with .doc extension

find

find recurses down the filesystem tree from any particular directory

(or set of directories) and locates files that match specified

conditions. The default pathname is always the present working

directory.

When no arguments are provided,

findlists all files in the current directory and all of its subdirectories.findis being able to run commands on the files that match your search criteria using-execoption.

find / -name somename

-user : File is owned by user uname (numeric user ID allowed).

-group : File belongs to group gname (numeric group ID allowed).

-size : File uses n units of space. c/k/M/G: bytes/Kilobytes/Megabytes/Gigabytes.

-name : Base of filename

-type : d for directory, f for file

-ctime X : is when the inode metadata (i.e., file ownership, permissions, etc.) last changed; it is often, but not necessarily, when the file was first created.

-atime X : search for accessed/last read

-mtime X : modified/last written. The X is the number of days and can be expressed as either a number (n) that means exactly that value, +n, which means greater than that number, or -n, which means less than that number. Similar options for times in minutes (as in -cmin, -amin, and -mmin)

-size Y : find files based on size. Size is in 512-byte blocks, by default; We can also specify bytes (c), kilobytes (k), megabytes (M), gigabytes (G). Example +10M

Example: Remove all files that end with .swp

find -name "*.swp" -exec rm {} ;

The {} (squiggly brackets) is a placeholder that will be filled with

all the file names that result from the find expression, and the

preceding command will be run on each one individually.

The command needs to be ended with either ‘;’ (including the single-quotes) or “;”.

We can also use the -ok option, which behaves the same as -exec,

except that find will prompt for permission before executing the

command (a good way to test your results before blindly executing any

potentially dangerous commands).

Example: Delete empty file and directories

find -empty -type d -delete

find -empty -type f -delete

Find each file in the current directory and tell its type and grep JPEG files.

find . -type f -exec file {} + | grep JPEG

Searching for text using Global Regular Expression Print (grep)

grep searches text files and data streams for patterns and can be

used with regular expressions.

Ways to provide input to grep

search a given file or files on a system (including a recursive search through sub-folders).

grep bitvijays /etc/passwdGrep also accepts inputs (usually via a pipe) from another command or series of commands.

cat /etc/passwd | grep bitvijays

Syntax

grep [options] [regexp] [filename]

-i, --ignore-case : 'it DoesNt MatTTer WhaT thE CAse Is'

-v, --invert-match : 'everything , BUT that text'

-A NUM : Print NUM lines of trailing context after matching lines.

-B NUM : Print NUM lines of trailing context before matching lines.

-C NUM : Print additional (leading and trailing) context lines before and after the match.

-a, --text : Process a binary file as if it were text; this is equivalent to the --binary-files=text option.

-w : Whole-word search

-L --files-without-match : which outputs the names of files that do NOT contain matches for your search pattern.

-l --files-with-matches : which prints out (only) the names of files that do contain matches for your search pattern.

-H (pattern> filename : Print the filename for each match.

example: grep -H 'a' testfile

testfile:carry out few cyber-crime investigations

Now, let's run the search a bit differently:

cat testfile | grep -H 'a'

(standard input):carry out few cyber-crime investigations

Using regular expressions

Note

Regular expression should be enclosed in single quotation marks or double quotes (allows environment variables to be used), to prevent the shell (Bash or others) from trying to interpret and expand the expression before launching the grep process.

grep 'v.r' testfile

thank you very much

In the search above, . is used to match any single character -

matches “ver” in “very”.

A regular expression may be followed by one of several repetition operators:

The period (

.) matches any single character.?means that the preceding item is optional, and if found, will be matched at the most, once.-means that grep will match the preceding item zero or more times.+means that grep will match the preceding item one or more times.Matching with times

{n}means the preceding item is matched exactly n times,{n,}means the item is matched n or more times.{n,m}means that the preceding item is matched at least n times, but not more than m times.{,m}means that the preceding item is matched, at the most, m times.

Todo

Examples of Matching with times

grep -e / grep -E

Matcher Selection

-E, --extended-regexp : Interpret PATTERN as an extended regular expression.

Matching Control

-e PATTERN, --regexp=PATTERN : Use PATTERN as the pattern. If this option is used multiple times or is combined with the -f (--file) option, search for all patterns given. This option can be used to protect a pattern beginning with “-”.

Example:

grep -E '^[0-9a-zA-Z]{30,}'

Grep anything that starts with a string containing characters from

0-9,a-z or A-Z and has matched 30 or more times.

Search a specific string

Scan files for a text present in them.

Find a way to scan the entire Linux system for all files containing a specific string of text. Just to clarify, we are looking for text within the file, not in the file name.

grep -rnw -e "pattern" --include={-.c,-.h} --exclude=-.o 'directory'

-r : search recursively

-n : print line number

-w : match the whole word.

--include={-.c,-.h} : Only search through the files which have .c or .h extensions.

--exclude=-.o : Exclude searching in files with .o extensions.

Note

--exclude or --include parameter could be used for efficient searching.

Line and word anchors

The

^anchor specifies that the pattern following it should be at the start of the line:grep '^th' testfile this

The

$anchor specifies that the pattern before it should be at the end of the line.grep 'i$' testfile Hi

The operator

<anchors the pattern to the start of a word.grep '\<fe' testfile carry out few cyber-crime investigations

>anchors the pattern to the end of a word.grep 'le\>' testfile is test file

The

b(word boundary) anchor can be used in place of<and>to signify the beginning or end of a word:grep -e '\binve' testfile carry out few cyber-crime investigations

Shell expansions - input to Grep

If we don’t single-quote the pattern passed to Grep, the shell could

perform shell expansion on the pattern and feed a changed pattern to

grep.

grep "$HOME" /etc/passwd

root:x:0:0:root:/root:/bin/bash

We used double quotes to make the Bash shell replace the environment

variable $HOME with the variable’s actual value (in this case,

/root). Thus, grep searches the /etc/passwd file for the

text /root, yielding the two lines that match.

grep `whoami` /etc/passwd

root:x:0:0:root:/root:/bin/bash

Here, back-tick expansion is done by the shell, replacing whoami

with the user name (root) that is returned by the whoami command.

Compressing Files

gzip, bzip2, xz and zip are used to compress files.

tar allows you to create or extract files from an archive file,

often called a tarball. tar can optionally compress while creating

the archive, and decompress while extracting its contents.

gzip: The most frequently used Linux compression utility.bzip2: Produces files significantly smaller than those produced bygzip.xz: The most space-efficient compression utility used in Linux.zip: required to examine and decompress archives from other OSs.tar: thetarutility is often used to group files in an archive and then compress the whole archive at once.

tar

Archiving utility

tar

-c : create archive

-t : list the content of the file

-x : extract the files

-j : bzip2 format

-z : gzip format

tar xvf mydir.tar : Extract all the files in mydir.tar into the mydir directory

tar xvf mydir.tar.gz : Extract all the files in mydir.tar.gz into the mydir directory

tar zcvf mydir.tar.gz mydir : Create the archive and compress with gzip

tar jcvf mydir.tar.bz2 mydir : Create the archive and compress with bz2

tar Jcvf mydir.tar.xz mydir : Create the archive and compress with xz

gzip

gzip * : Compresses all files in the current directory; each file is compressed and renamed with a .gz extension

gzip -r projectX : Compresses all files in the projectX directory, along with all files in all of the directories under projectX

gunzip foo : De-compresses foo found in the file foo.gz. Under the hood, the gunzip command is the same as gzip –d

bzip

bzip2 * : Compresses all of the files in the current directory and replaces each file with a file renamed with a .bz2 extension

bunzip2 *.bz2 : Decompresses all of the files with an extension of .bz2 in the current directory. Under the hood, bunzip2 is the same as calling bzip2 -d

xz

xz * : Compresses all of the files in the current directory and replaces each file with a .xz extension

xz foo : Compresses foo into foo.xz using the default compression level (-6), and removes foo if compression succeeds

xz -dk bar.xz : Decompresses bar.xz into bar and does not remove bar.xz even if decompression is successful

xz -dcf a.txt b.txt.xz > abcd.txt : Decompresses a mix of compressed and uncompressed files to standard output, using a single command

xz -d *.xz : Decompresses the files compressed using xz

zip

zip backup * : Compresses all files in the current directory and places them in the backup.zip

zip -r backup.zip ~ : Archives your login directory (~) and all files and directories under it in backup.zip

unzip backup.zip : Extracts all files in backup.zip and places them in the current directory

Backing up data

Backing up the date can be done in multiple ways. A simple way is by

simply copying with cp or using more robust rsync. Both can be

used to synchronize entire directory trees. However, rsync is more

efficient, as it checks if the file being copied already exists. If the

file exists and there is no change in size or modification time,

rsync will avoid an unnecessary copy and save time. Furthermore,

because rsync copies only the parts of files that have changed, it

can be very fast.

cp

cp can only copy files to and from destinations on the local machine

(unless copying to or from a filesystem mounted using NFS).

cp (SOURCE> (DIRECTORY>

-r : recursive.

-a : similar to preserve,

-p : preserve

-v : verbose.

rsync

rsync can be used to copy files from one machine to another.

Locations are designated in the target:path form, where target can

be in the form of someone@host. The someone@ part is optional

and used if the remote user is different from the local user.

rsync is very efficient when recursively copying one directory tree

to another, because only the differences are transmitted over the

network. One often synchronizes the destination directory tree with the

origin, using the -r option to recursively walk down the directory

tree copying all files and directories below the one listed as the

source.

For example, a useful way to back up a project directory might be to use the following command:

rsync -r project-X someone@host:archives/project-X

Note

rsync can be very destructive! Accidental misuse can do a lot of harm to data and programs by inadvertently copying changes to where they are not wanted. Take care to specify the correct options and paths. It is recommended that first test the rsync command using the -dry-run option to ensure that it provides the desired results.

To use rsync at the command prompt, type rsync sourcefile destinationfile, where either file can be on the local machine or a networked machine; rsync will copy the contents of sourcefile to destinationfile.

rsync --progress -avrxH --delete sourcedir destdir

Comparing files with diff

diff : compare files line by line.

-c Provides a listing of differences that include three lines of context before and after the lines differing in content

-r Used to recursively compare subdirectories, as well as the current directory

-i Ignore the case of letters

-w Ignore differences in spaces and tabs (white space)

-q Be quiet: only report if files are different without listing the differences

To compare two files, at the command prompt, type

diff [options] <filename1> <filename2>. diff is meant to be used for

text files; for binary files, one can use cmp.

patch is a useful tool in Linux. Many modifications to source code

and configuration files are distributed with patch files. They contain

the deltas or changes from an old version of a file to the new version.

A patch file contains the deltas (changes) required to update an

older version of a file to the new one. The patch files are actually

produced by running diff with the correct options:

diff -Nur originalfile newfile > patchfile

To apply a patch, the user can just do either of the two methods below:

patch -p1 < patchfile

patch originalfile patchfile

The first usage is more common. It is often used to apply changes to an

entire directory tree, rather than just one file, as in the second

example. To understand the use of the -p1 option and many others,

see the man page for the patch.

Identifying Users

whoami : Identify the current user

who : list the currently logged-on users

who -a : provide more detailed information

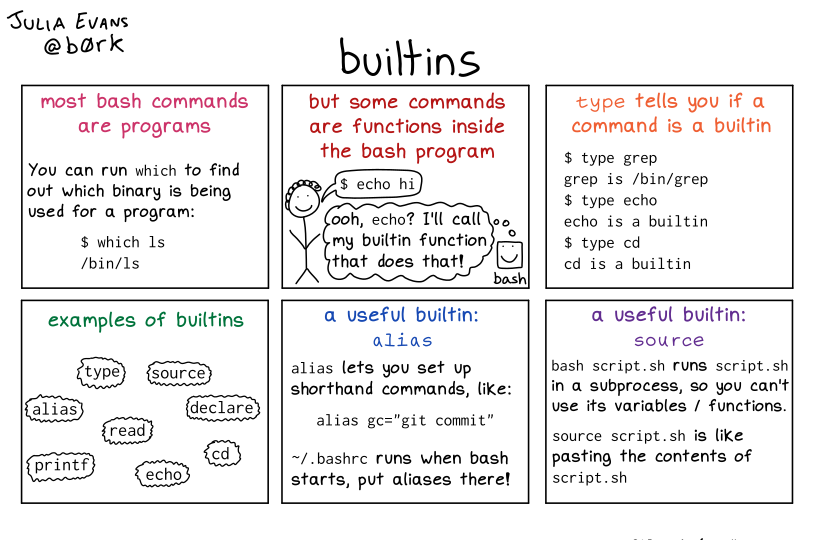

Builtins

Builtins

Other commands

nm-applet : a applet for the network manager.

wc : print newline, word, and byte counts for each file.

-c : print the bytes count.

-l : print the lines count.

-w : print the word count.

sort : sort lines of text files.

cal : Display calendar

date : Display date. Date command provides multiples options for displaying day and time, very helpful in creating backups with name having time and date.

tr : Converts from smaller to uppercase. tr stands for translate.

-d : delete characters in the text.

tee : saves output in file as well as forward it.

touch : Create zero byte files, mainly used for changing the timestamps of the file.

make : If your program source file name is test.c/cpp, then you can directly write make test, this would compile the test.c/cpp program. Remember this it's a faster way.

stat : View detailed information about a file, including its name, size, last modified date and permissions.

uniq : Report or omit repeated lines.

-c : prefix lines by the number of occurrences. (--count)

free : displays information on memory